In this post, I will walk through, step by step, the code to download individual YouTube videos or all the videos of a channel.

You can download videos with the pytube module by passing it the video URL. First, install pytube:

    python -m pip install pytube

By default, pytube saves a downloaded video to your current directory. What if you want to keep a local copy in a specific folder, for example to study offline? The following code downloads all the videos of a given channel to a location of your choice on your PC.

    from pytube import Channel, YouTube
    from pytube.cli import on_progress

    # Folder where the videos are saved
    DOWNLOAD_DIR = 'C:\\Users\\Dell\\Videos\\downs\\Sriram_Channel\\'

    def get_youtube(i):
        """Download the video at URL i as the highest-resolution progressive MP4."""
        try:
            yt = YouTube(i, on_progress_callback=on_progress)
            # Progressive streams carry audio and video in a single file
            stream = (yt.streams.filter(progressive=True, file_extension='mp4')
                      .order_by('resolution').desc().first())
            stream.download(DOWNLOAD_DIR)
        except Exception as exc:
            # Report the URL, not yt.title: yt is undefined if YouTube() itself failed
            print(f'\nError in downloading {i}: {exc}')

    # Download every video of the channel
    channel = Channel('https://www.youtube.com/channel/UCw43xCtkl26vGIGtzBoaWEw')
    for video in channel.video_urls:
        get_youtube(video)
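
The download folder in the snippet above is a hard-coded Windows path. If you want something more portable, here is a minimal sketch that builds the folder path with `pathlib`. The helper name `channel_dir` and the default base folder `~/Videos/downs` are my own assumptions for illustration, not part of pytube.

```python
import re
from pathlib import Path


def channel_dir(channel_name, base=None):
    """Return <base>/<sanitized channel name> without touching the disk."""
    # Assumption: default to ~/Videos/downs, mirroring the folder used in this post.
    if base is None:
        base = Path.home() / "Videos" / "downs"
    # Replace characters that Windows rejects in folder names.
    safe_name = re.sub(r'[<>:"/\\|?*]', "_", channel_name).strip() or "channel"
    return Path(base) / safe_name


if __name__ == "__main__":
    print(channel_dir("Sriram_Channel"))
```

You could then pass `str(channel_dir("Sriram_Channel"))` to `download()` in place of the hard-coded string.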

You may get a regular expression error while fetching the video information, either because of changes to channel URLs that now use symbols, or because of other restrictions that the pytube module does not support. In that case, you either have to install the latest version of pytube or identify and fix the problem yourself.
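
To see which kind of channel URL you are dealing with before handing it to a library, you can classify it yourself. This is only a rough sketch using the standard library. The URL shapes listed in `_PATTERNS` (including `@` handles) are my assumptions about how channel URLs commonly look, and `classify_channel_url` is a hypothetical helper, not part of pytube or scrapetube.

```python
import re
from urllib.parse import urlparse

# Assumed URL shapes; YouTube may add or change forms at any time.
_PATTERNS = [
    ("channel_id", re.compile(r"^/channel/(UC[\w-]+)")),
    ("handle", re.compile(r"^/(@[\w.-]+)")),
    ("custom", re.compile(r"^/c/([^/]+)")),
    ("user", re.compile(r"^/user/([^/]+)")),
]


def classify_channel_url(url):
    """Return (kind, value) for a channel URL, or ("unknown", None)."""
    path = urlparse(url).path
    for kind, pattern in _PATTERNS:
        match = pattern.match(path)
        if match:
            return kind, match.group(1)
    return "unknown", None


if __name__ == "__main__":
    print(classify_channel_url(
        "https://www.youtube.com/channel/UCw43xCtkl26vGIGtzBoaWEw"))
```

Only the `channel_id` form gives you the channel ID directly; for the other forms you still need to look the ID up.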

To avoid this, you can use another module called scrapetube:

    pip install scrapetube

The following code gives you the video ID of each video in the channel whose ID you provide as input. You need to prepend 'https://www.youtube.com/watch?v=' to each video ID to make it a complete URL.

    import scrapetube

    videos = scrapetube.get_channel("........t7XvGJ3AGpSon.......")
    for video in videos:
        print('https://www.youtube.com/watch?v=' + video['videoId'])

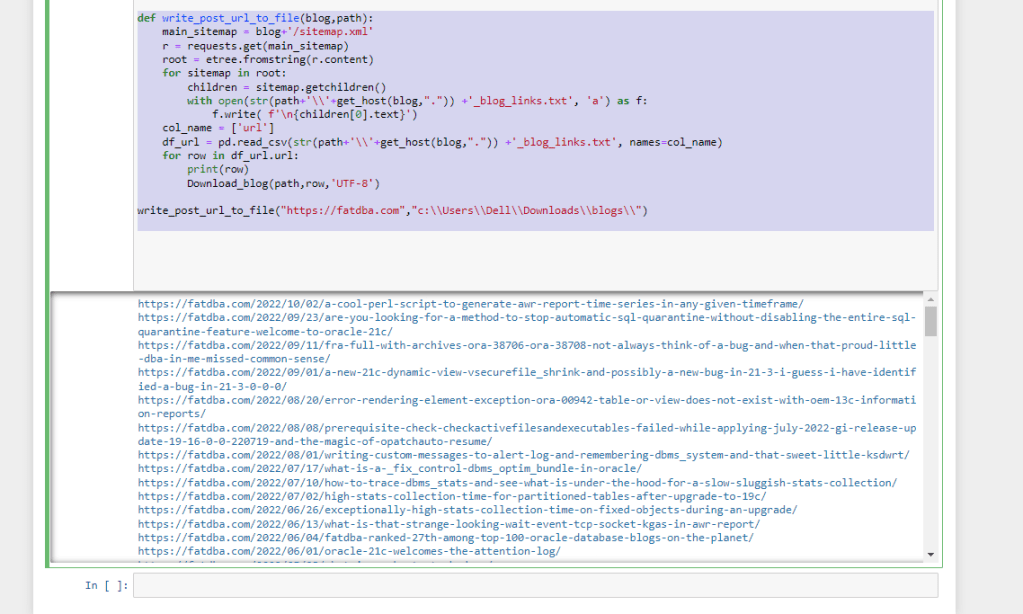
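
If you build these URLs in more than one place, a small helper keeps the prefix in one spot. This is a minimal sketch: `watch_url` and `watch_urls` are hypothetical names of mine, and the sample dictionaries only imitate the `videoId` key used above, they are not real scrapetube output.

```python
from urllib.parse import urlencode

WATCH_BASE = "https://www.youtube.com/watch"


def watch_url(video_id):
    """Build the full watch URL for a single video ID."""
    return f"{WATCH_BASE}?{urlencode({'v': video_id})}"


def watch_urls(videos):
    """Yield a watch URL for each item that has a 'videoId' key."""
    for video in videos:
        video_id = video.get("videoId")
        if video_id:
            yield watch_url(video_id)


if __name__ == "__main__":
    # Made-up items standing in for the dictionaries returned by scrapetube.
    sample = [{"videoId": "abc123"}, {"videoId": "xyz_789"}]
    for url in watch_urls(sample):
        print(url)
```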

As you can see, the channel ID is the key here. What if you do not know the channel ID? You can get it either from the page source in your browser, or by using the "requests" and "BeautifulSoup" modules:

    import requests
    from bs4 import BeautifulSoup

    url = input("Please enter the YouTube channel URL: ")
    response = requests.get(url)
    soup = BeautifulSoup(response.content, 'html.parser')
    # The channel ID is stored in a <meta itemprop="channelId"> tag
    tag = soup.find("meta", itemprop="channelId")
    print(tag['content'] if tag else 'Channel ID not found')

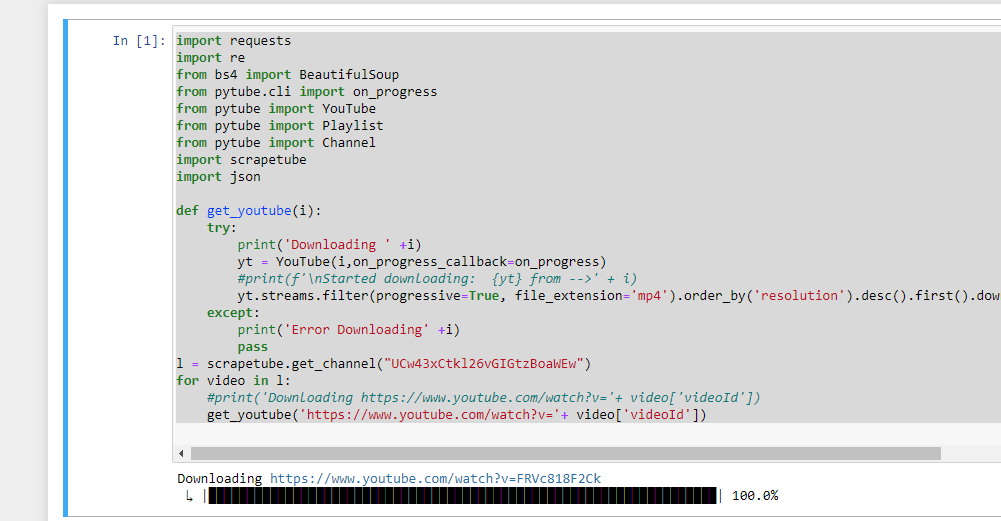
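
If you have already saved the page source from your browser, you can pull the channel ID out of it offline. Here is a rough sketch that uses only the standard library's `html.parser`. It assumes the page contains the same `<meta itemprop="channelId">` tag as above, and the sample HTML is a made-up fragment for illustration.

```python
from html.parser import HTMLParser


class ChannelIdParser(HTMLParser):
    """Remember the content of the first <meta itemprop="channelId"> tag."""

    def __init__(self):
        super().__init__()
        self.channel_id = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if (self.channel_id is None and tag == "meta"
                and attributes.get("itemprop") == "channelId"):
            self.channel_id = attributes.get("content")


def extract_channel_id(html):
    """Return the channel ID found in the HTML text, or None if absent."""
    parser = ChannelIdParser()
    parser.feed(html)
    return parser.channel_id


if __name__ == "__main__":
    # Made-up fragment standing in for a saved channel page.
    sample = ('<html><head><meta itemprop="channelId" '
              'content="UCw43xCtkl26vGIGtzBoaWEw"></head></html>')
    print(extract_channel_id(sample))
```

The function returns `None` when the tag is missing, so you can decide what to do instead of hitting an exception.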

Instead of using Channel from the pytube module, let's make things easier by changing the original code snippet posted above to use "scrapetube.get_channel":

    from pytube import YouTube
    from pytube.cli import on_progress
    import scrapetube

    # Folder where the videos are saved
    DOWNLOAD_DIR = 'C:\\Users\\Dell\\Videos\\downs\\Sriram_Channel\\'

    def get_youtube(i):
        """Download the video at URL i as the highest-resolution progressive MP4."""
        try:
            print('Downloading ' + i)
            yt = YouTube(i, on_progress_callback=on_progress)
            # Progressive streams carry audio and video in a single file
            stream = (yt.streams.filter(progressive=True, file_extension='mp4')
                      .order_by('resolution').desc().first())
            stream.download(DOWNLOAD_DIR)
        except Exception as exc:
            print(f'Error downloading {i}: {exc}')

    # scrapetube yields one dictionary per video; build the full URL from its ID
    videos = scrapetube.get_channel("UCw43xCtkl26vGIGtzBoaWEw")
    for video in videos:
        get_youtube('https://www.youtube.com/watch?v=' + video['videoId'])

Hope you like it! Happy reading.